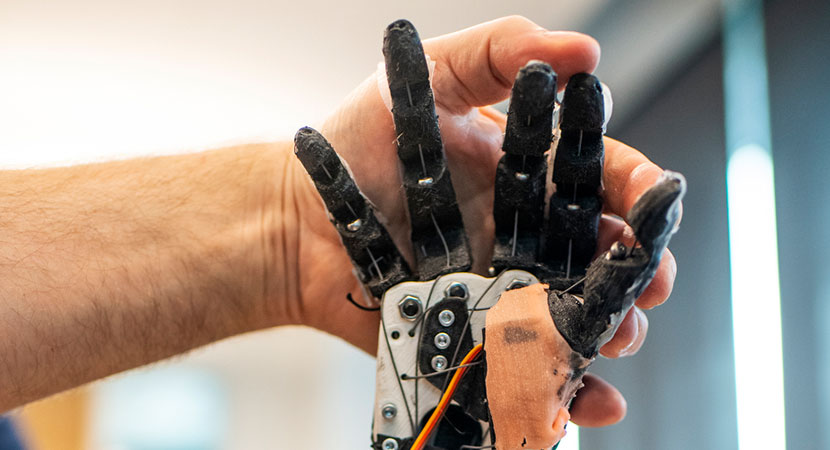

Samantha Johnson, a bioengineering graduate student at Northeastern University, has developed a robotic arm named TATUM (Tactile ASL Translational User Mechanism) that produces tactile sign language. The robot is designed to help deaf-blind individuals communicate independently by letting them feel the signs as they are made. Johnson's inspiration came from a sign language course she took as an undergraduate. In that course she interacted with people from the Deaf-Blind Contact Center and recognized the need for tactile sign language among people who are both deaf and blind.

The robot is still in the early stages of development and currently focuses on finger-spelling the American Manual Alphabet and a set of basic words. The long-term goal is to make the robot fluent in American Sign Language and to connect it to text-based communication systems such as email, text messages, and social media. Johnson also aims to make the robot customizable so that it can accommodate regional and cultural differences in sign language. If these goals are met, the project could give deaf-blind individuals greater communication and independence in their daily lives.